Sunday, 31 March 2019

Valve freezes updates on 'Artifact' to face 'deep-rooted' issues

Sega Genesis Mini will launch on September 19th with 40 games

DC Universe offers access to DC's full library of digital comics

Facebook COO says it's 'exploring' restrictions on who can go live

Google Store lists unannounced 'Nest Hub Max' 10-inch smart display

Apple cancels AirPower after more than a year of delays

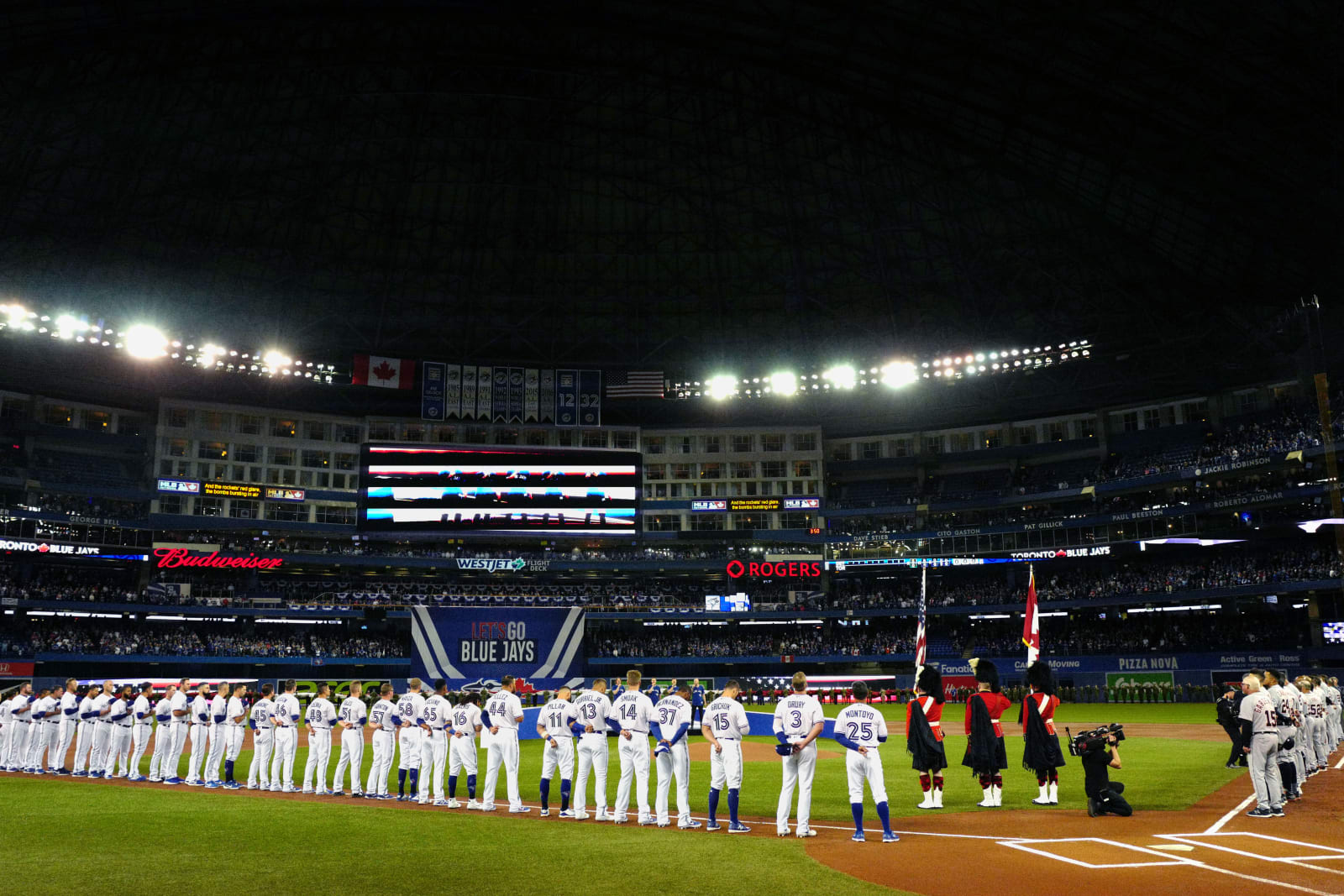

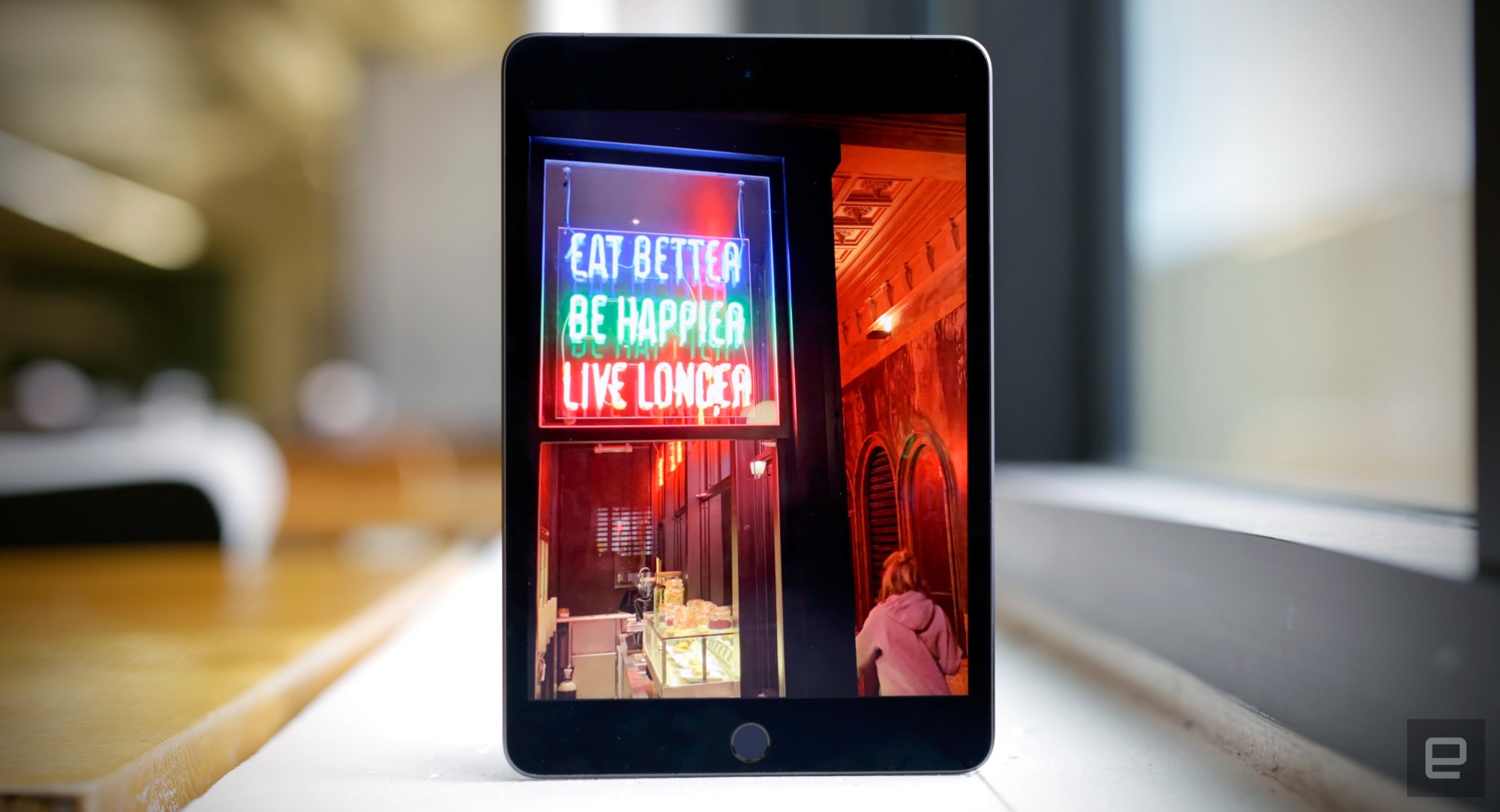

Apple iPad mini review (2019): Still the best small tablet

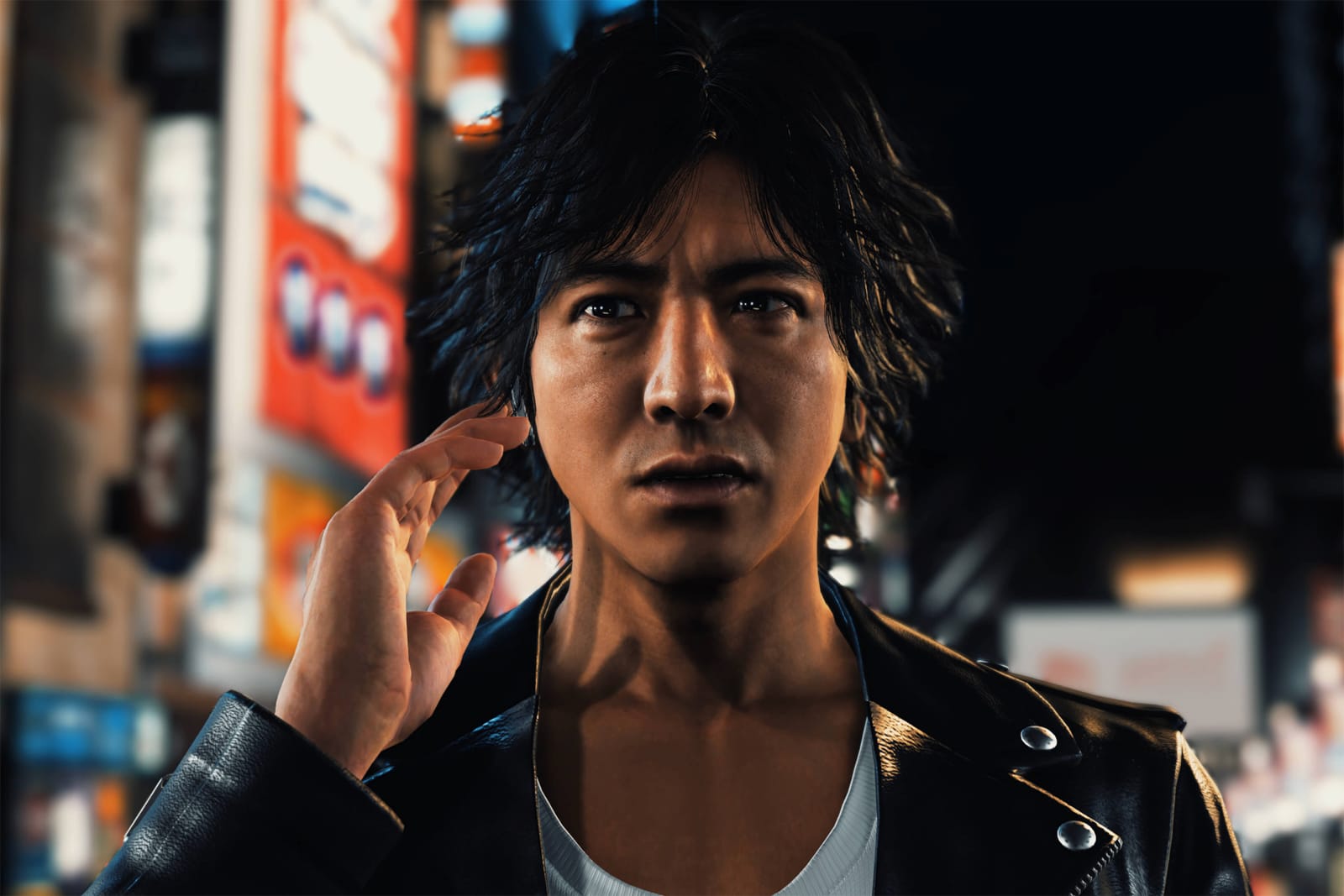

Sega will still release 'Judgment' worldwide despite actor's arrest

Apple sells wireless charging AirPods, cancels charger days later

“Works with AirPower mat”. Apparently not. It looks to me like Apple doesn’t treat customers with the same “high standard” of care it apparently reserves for its hardware quality. Nine days after launching its $199 wireless charging AirPods headphones that touted compatibility with the forthcoming Apple AirPower inductive charger mat, Apple has just scrapped AirPower entirely. It’s an uncharacteristically sloppy move for the “it just works” company. This time it didn’t.

Given how soon after the launch this cancellation came, it’s fair to ask whether Apple already knew AirPower wasn’t viable before launching the new AirPods wireless charging case on March 20th. Failing to be transparent about that would be an abuse of customer trust. That’s especially damaging for a company constantly asking us to order newly announced products we haven’t touched when there’s always another iteration around the corner. It should really find some way to make it up to people, especially given it has $245 billion in cash on hand.

TechCrunch broke the news of AirPower’s demise. “After much effort, we’ve concluded AirPower will not achieve our high standards and we have cancelled the project. We apologize to those customers who were looking forward to this launch. We continue to believe that the future is wireless and are committed to push the wireless experience forward,” said Dan Riccio, Apple’s senior vice president of Hardware Engineering, in an emailed statement today.

That comes as a pretty sour surprise for people who bought the $199 wireless charging AirPods that mention AirPower compatibility or the $79 standalone charging case with a full-on diagram of how to use AirPower drawn on the box.

Apple first announced the AirPower mat in 2017 saying it would arrive the next year along with a wireless charging case for AirPods. 2018 came and went. But when the new AirPods launched March 20th with no mention of AirPower in the press release, suspicions mounted. Now we know that issues with production, reportedly due to overheating, have caused it to be canceled. Apple decided not to ship what could become the next Galaxy Note 7 fire hazard.

The new AirPods with wireless charging case even had a diagram of AirPower on the box. Image via Ryan Jones

There are plenty of other charging mats that work with AirPods, and maybe Apple will release a future iPhone or MacBook that can wirelessly pass power to the pods. But anyone hoping to avoid janky third-party brands and keep it in the Apple family is out of luck for now.

Thankfully, some who bought the new AirPods with wireless charging case are still eligible for a refund. But typically if you get an Apple product personalized with an engraving (I had my phone number laser-etched on my AirPods since I constantly lose them), there are no refunds allowed. And then there are all the people who bought Apple Watches, or iPhone 8 or later models who were anxiously awaiting AirPower. We’ve asked Apple if it will grant any return exceptions.

Combined with an apology for the disastrously fragile keyboards on newer MacBooks, an apology over the Mac Pro, an apology for handling the iPhone slowdown messaging wrong, Apple’s recent vaporware services event where it announced Apple TV+ and Arcade despite them being months from launch, and now an AirPower apology and cancellation, the world’s cash-richest company looks like a mess. Apple risks looking as unreliable as Android if it can’t get its act together.

from Gadgets – TechCrunch https://ift.tt/2V3cbxq

via IFTTT

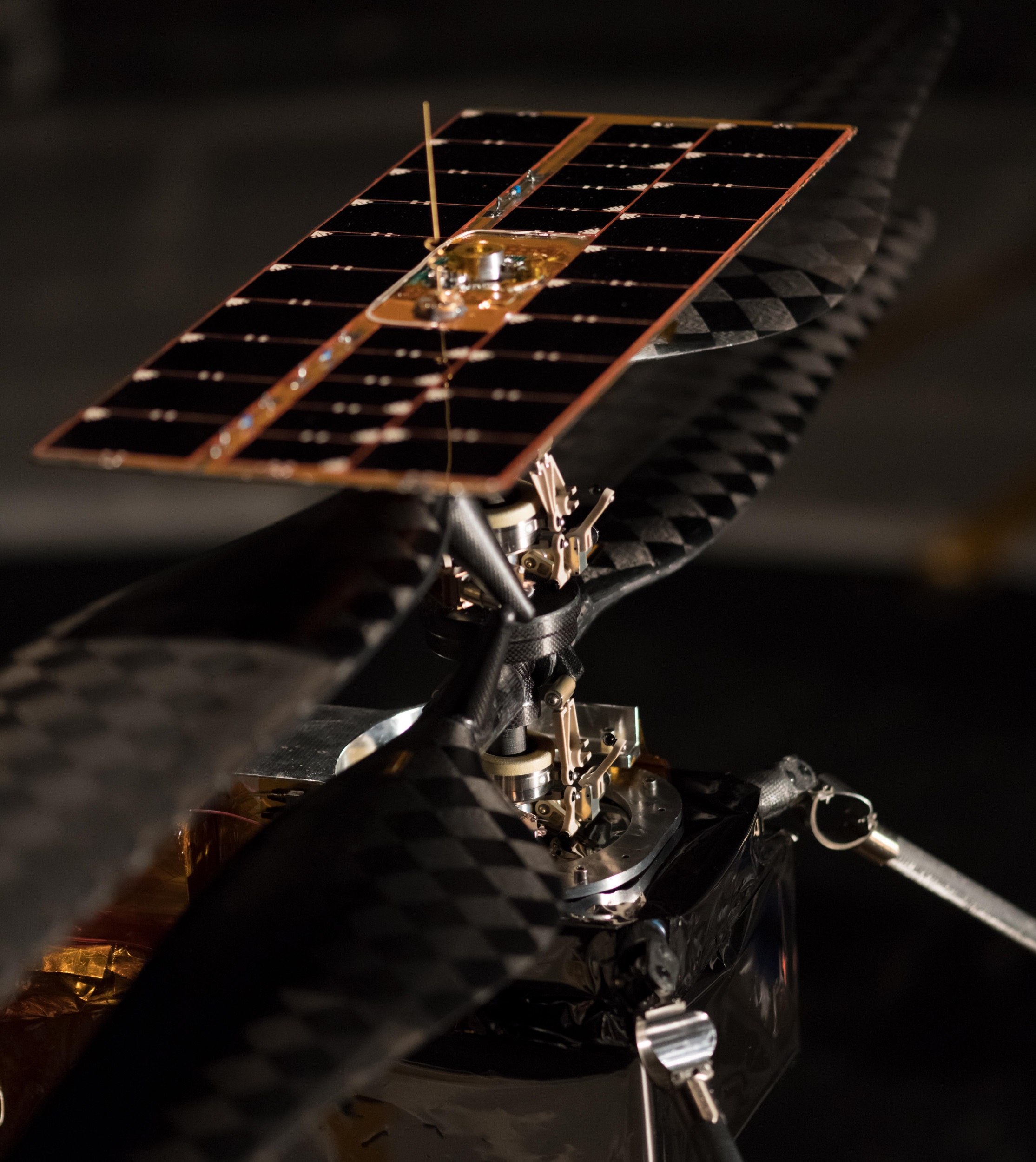

Mars helicopter bound for the Red Planet takes to the air for the first time

The Mars 2020 mission is on track for launch next year, and nesting inside the high-tech new rover heading that direction is a high-tech helicopter designed to fly in the planet’s nearly non-existent atmosphere. The actual aircraft that will fly on the Martian surface just took its first flight and its engineers are over the moon.

“The next time we fly, we fly on Mars,” said MiMi Aung, who manages the project at JPL, in a news release. An engineering model that was very close to final has over an hour of time in the air, but these two brief test flights were the first and last time the tiny craft will take flight until it does so on the distant planet (not counting its “flight” during launch).

“Watching our helicopter go through its paces in the chamber, I couldn’t help but think about the historic vehicles that have been in there in the past,” she continued. “The chamber hosted missions from the Ranger Moon probes to the Voyagers to Cassini, and every Mars rover ever flown. To see our helicopter in there reminded me we are on our way to making a little chunk of space history as well.”

A helicopter flying on Mars is much like a helicopter flying on Earth, except of course for the slight differences that the other planet has only about a third of Earth’s gravity and 99 percent less air. It’s more like flying at 100,000 feet, Aung suggested.

The test rig they set up not only produces a near-vacuum, replacing the air with a thin, Mars-esque CO2 mix, but a “gravity offload” system simulates lower gravity by giving the helicopter a slight lift via a cable.

It flew at a whopping two inches of altitude for a total of a minute in two tests, which was enough to show the team that the craft (with all its 1,500 parts and four pounds) was ready to package up and send to the Red Planet.

“It was a heck of a first flight,” said tester Teddy Tzanetos. “The gravity offload system performed perfectly, just like our helicopter. We only required a 2-inch hover to obtain all the data sets needed to confirm that our Mars helicopter flies autonomously as designed in a thin Mars-like atmosphere; there was no need to go higher.”
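The gravity offload idea can be put into rough numbers. Assuming, as a simplification not taken from JPL’s description, that the cable simply cancels the difference between the craft’s Earth weight and its Mars weight, and using standard surface gravities of about 9.81 m/s² for Earth and 3.71 m/s² for Mars with the roughly four-pound mass quoted above:

```latex
\begin{aligned}
m &\approx 4\ \text{lb} \approx 1.81\ \text{kg} \\
F_{\text{cable}} &= m\,(g_{\text{Earth}} - g_{\text{Mars}}) \approx 1.81 \times (9.81 - 3.71) \approx 11.1\ \text{N} \\
\frac{F_{\text{cable}}}{m\,g_{\text{Earth}}} &= 1 - \frac{g_{\text{Mars}}}{g_{\text{Earth}}} \approx 1 - 0.38 = 0.62
\end{aligned}
```

In this idealized picture the cable carries roughly 62 percent of the helicopter’s Earth weight, leaving the rotors to lift the remaining 38 percent, which is what they would have to lift on Mars.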

A few months after the Mars 2020 rover has landed, the helicopter will detach and do a few test flights of up to 90 seconds. Those will be the first heavier-than-air flights on another planet — powered flight, in other words, rather than, say, a balloon filled with gaseous hydrogen.

The craft will operate mostly autonomously, since the half-hour round trip for commands would be far too long for an Earth-based pilot to operate it. It has its own solar cells and batteries, plus little landing feet, and will attempt flights of increasing distance from the rover over a 30-day period. It should go about three meters in the air and may eventually get hundreds of meters away from its partner.

Mars 2020 is estimated to be ready to launch next summer, arriving at its destination early in 2021. Of course, in the meantime, we’ve still got Curiosity and InSight up there, so if you want the latest from Mars, you’ve got plenty of options to choose from.

from Gadgets – TechCrunch https://ift.tt/2HN05VZ

Canon takes on Fuji with new instant-print CLIQ cameras

Instant print cameras have been popular for a long, long time, but they’re seeing a renaissance now — led by Fujifilm, whose Instax mini-films and cameras lead the pack. But Canon wants in, and has debuted a pair of new cameras to challenge Fuji’s dominance — but their reliance on digital printing may hold them back.

The cameras have confusing, nonsensical names in both the U.S. and Europe: Here, they’re the IVY CLIQ and CLIQ+, while across the pond it’s the Zoemini C and S. Really now, Canon! But the devices themselves are extremely simple, especially if you ignore the cheaper one, which you absolutely should do.

The compact CLIQ+ has a whole 8 megapixels on its tiny sensor, but that’s more than enough to send to the 2×3″ Zink printer built into the camera. The printer can store up to ten sheets of paper at once, and spits them out in seconds if you’re in a hurry, or whenever you feel like it if you want to tweak them, add borders, crop or do duplicates, and so on. That’s all done in a companion app.

And herein lies the problem: Zink prints just aren’t that good. They cost less than half of what Instax Mini do per shot (think a quarter or so if you buy a lot) — but the difference in quality is visible. They’ve gotten better since the early days when they were truly bad, but the resolution and color reproduction just isn’t up to instant film standards. Instax may not be perfect, but a good shot will get very nice color and very natural-looking (if not tack-sharp) details.

The trend towards instant printing is also at least partly a trend towards the purely mechanical and analog. People tired of taking a dozen shots on their phone and then never looking at them again are excited by the idea that you can leave your phone in your bag and get a fun photographic keepsake, no apps or wireless connections necessary.

A digital camera with a digital printer that connects wirelessly to an app on your smartphone may not be capable of capitalizing on this trend. But then again, they could be a great cheap option for the younger digital-native set and kids who don’t care about image quality, have no affinity for analog tech, and just want to print stickers for their friends or add memes to their shots.

The $160 CLIQ+, or Zoemini S, has a ring flash as well as the higher megapixel count of the two (8 vs 5), and the lower-end $100 model doesn’t support the app, either. Given the limitations of the sensor and printer, you’re going to want as much flash as you can get. That’s too bad, because the cheap one comes in a dandy yellow color that is by far the most appealing to me.

The cameras should be available in a month or two at your local retailer or online shop.

from Gadgets – TechCrunch https://ift.tt/2CGrNji

Ocean drone startup merger spawns Sofar, the DJI of the sea

What lies beneath the murky depths? SolarCity co-founder Peter Rive wants to help you and the scientific community find out. He’s just led a $7 million Series A for Sofar Ocean Technologies, a new startup formed from a merger he orchestrated between underwater drone maker OpenROV and sea sensor developer Spoondrift. Together, they’re teaming up their 1080p Trident drone and solar-powered Spotter sensor to let you collect data above and below the surface. They can help you shoot awesome video footage, track waves and weather, spot fishing and diving spots, inspect boats or infrastructure for damage, monitor aquaculture sites or catch smugglers.

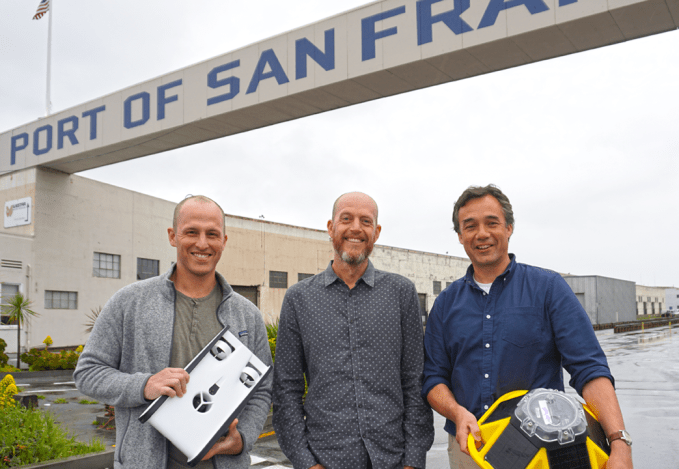

Sofar’s Trident drone (left) and Spotter sensor (right)

“Aerial drones give us a different perspective of something we know pretty well. Ocean drones give us a view at something we don’t really know at all,” former Spoondrift and now Sofar CEO Tim Janssen tells me. “The Trident drone was created for field usage by scientists and is now usable by anyone. This is pushing the barrier towards the unknown.”

But while Rive has a soft spot for the ecological potential of DIY ocean exploration, the sea is crowded with competing drones. There are more expensive professional research-focused devices like the Saildrone, DeepTrekker and SeaOtter-2, as well as plenty of consumer-level devices like the $800 Robosea Biki, $1,000 Fathom ONE and $5,000 iBubble. The $1,700 Sofar Trident, which requires a cord to a surface buoy to power its three hours of dive time and two meters per second speed, sits in the middle of the pack, but Sofar co-founder David Lang thinks Trident can win with simplicity, robustness and durability. The question is whether Sofar can become the DJI of the water, leading the space, or if it will become just another commoditized hardware maker drowning in knock-offs.

From left: David Lang (co-founder of OpenROV), Peter Rive (chairman of Sofar), and Tim Janssen (co-founder and CEO of Sofar)

Spoondrift launched in 2016 and raised $350,000 to build affordable ocean sensors that can produce climate-tracking data. “These buoys (Spotters) are surprisingly easy to deploy, very light and easy to handle, and can be lowered in the water by hand using a line. As a result, you can deploy them in almost any kind of conditions,” says Dr. Aitana Forcén-Vázquez of MetOcean Solutions.

OpenROV (it stands for Remotely Operated Vehicle) started seven years ago and raised $1.3 million in funding from True Ventures and National Geographic, which was also one of its biggest Trident buyers. “Everyone who has a boat should have an underwater drone for hull inspection. Any dock should have its own weather station with wind and weather sensors,” Sofar’s new chairman Rive declares.

Spotter could unlock data about the ocean at scale

Sofar will need to scale to accomplish Rive’s mission to get enough sensors in the sea to give us more data on the progress of climate change and other ecological issues. “We know very little about our oceans since we have so little data, because putting systems in the ocean is extremely expensive. It can cost millions for sensors and for boats,” he tells me. We gave everyone GPS sensors and cameras and got better maps. The ability to put low-cost sensors on citizens’ rooftops unlocked tons of weather forecasting data. That’s more feasible with Spotter, which costs $4,900 compared to $100,000 for some sea sensors.

Sofar hardware owners do not have to share data back to the startup, but Rive says many customers are eager to. They’ve requested better data portability so they can share with fellow researchers. The startup believes it can find ways to monetize that data in the future, which is partly what attracted the funding from Rive and fellow investors True Ventures and David Sacks’ Craft Ventures. The funding will build up that data business and also help Sofar develop safeguards to make sure its Trident drones don’t go where they shouldn’t. That’s obviously important, given London’s Gatwick airport shutdown due to a trespassing drone.

Spotter can relay weather conditions and other climate data to your phone

“The ultimate mission of the company is to connect humanity to the ocean as we’re mostly conservationists at heart,” Rive concludes. “As more commercialization and business opportunities arise, we’ll have to have conversations about whether those are directly benefiting the ocean. It will be important to have our moral compass facing in the right direction to protect the earth.”

from Gadgets – TechCrunch https://ift.tt/2FG3NyA

This self-driving AI faced off against a champion racer (kind of)

Developments in the self-driving car world can sometimes be a bit dry: a million miles without an accident, a 10 percent increase in pedestrian detection range, and so on. But this research has both an interesting idea behind it and a surprisingly hands-on method of testing: pitting the vehicle against a real racing driver on a course.

To set expectations here, this isn’t some stunt; it’s actually warranted given the nature of the research, and it’s not like they were trading positions, jockeying for entry lines, and generally rubbing bumpers. They went separately, and the researcher, whom I contacted, politely declined to provide the actual lap times. This is science, people. Please!

The question which Nathan Spielberg and his colleagues at Stanford were interested in answering has to do with an autonomous vehicle operating under extreme conditions. The simple fact is that a huge proportion of the miles driven by these systems are at normal speeds, in good conditions. And most obstacle encounters are similarly ordinary.

If the worst should happen and a car needs to exceed these ordinary bounds of handling — specifically friction limits — can it be trusted to do so? And how would you build an AI agent that can do so?

The researchers’ paper, published today in the journal Science Robotics, begins with the assumption that a physics-based model just isn’t adequate for the job. These are computer models that simulate the car’s motion in terms of weight, speed, road surface, and other conditions. But they are necessarily simplified, and their simplifying assumptions produce increasingly inaccurate results as values exceed ordinary limits.

Imagine if such a simulator simplified each wheel to a point or line when during a slide it is highly important which side of the tire is experiencing the most friction. Such detailed simulations are beyond the ability of current hardware to do quickly or accurately enough. But the results of such simulations can be summarized into an input and output, and that data can be fed into a neural network — one that turns out to be remarkably good at taking turns.

The simulation provides the basics of how a car of this make and weight should move when it is going at speed X and needs to turn at angle Y — obviously it’s more complicated than that, but you get the idea. It’s fairly basic. The model then consults its training, but is also informed by the real-world results, which may perhaps differ from theory.

So the car goes into a turn knowing that, theoretically, it should have to move the wheel this much to the left, then this much more at this point, and so on. But the sensors in the car report that despite this, the car is drifting a bit off the intended line — and this input is taken into account, causing the agent to turn the wheel a bit more, or less, or whatever the case may be.
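The “theory plus correction” loop described in the last two paragraphs is a feedforward-plus-feedback controller, and a toy version fits in a few lines of Python. This is a generic sketch, not the Stanford team’s controller: a simple kinematic bicycle model stands in for their learned model as the feedforward term, and the wheelbase and feedback gain below are made-up illustrative values.

```python
import math

# Illustrative parameters (assumptions, not values from the paper).
WHEELBASE_M = 2.5   # assumed distance between front and rear axles, meters
K_LATERAL = 0.1     # assumed feedback gain, radians of steering per meter of error


def feedforward_steer(path_curvature: float) -> float:
    """Steering angle (rad) that a kinematic bicycle model predicts for a path
    curvature (1/m). Positive curvature and positive angle both mean 'left'.
    Stands in for the model's 'theoretically, turn the wheel this much'."""
    return math.atan(WHEELBASE_M * path_curvature)


def feedback_steer(lateral_error_m: float) -> float:
    """Correction from the measured deviation. Positive error means the car
    has drifted left of the intended line, so the correction steers right."""
    return -K_LATERAL * lateral_error_m


def steering_command(path_curvature: float, lateral_error_m: float) -> float:
    """Total command: planned steering plus a correction for observed drift."""
    return feedforward_steer(path_curvature) + feedback_steer(lateral_error_m)
```

For example, on a straight (zero curvature) with the sensors reporting a 0.5 m drift to the left, `steering_command(0.0, 0.5)` returns about -0.05 rad, a small nudge back to the right. A real controller operating at the friction limits would need a much richer feedforward model, which is exactly the part the researchers replaced with a neural network.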

And where does the racing driver come into it, you ask? Well, the researchers needed to compare the car’s performance with a human driver who knows from experience how to control a car at its friction limits, and that’s pretty much the definition of a racer. If your tires aren’t hot, you’re probably going too slow.

The team had the racer (a “champion amateur race car driver,” as they put it) drive around the Thunderhill Raceway Park in California, then sent Shelley — their modified, self-driving 2009 Audi TTS — around as well, ten times each. And it wasn’t a relaxing Sunday ramble. As the paper reads:

Both the automated vehicle and human participant attempted to complete the course in the minimum amount of time. This consisted of driving at accelerations nearing 0.95g while tracking a minimum time racing trajectory at the physical limits of tire adhesion. At this combined level of longitudinal and lateral acceleration, the vehicle was able to approach speeds of 95 miles per hour (mph) on portions of the track.

Even under these extreme driving conditions, the controller was able to consistently track the racing line with the mean path tracking error below 40 cm everywhere on the track.

In other words, while pulling nearly a G and hitting 95, the self-driving Audi’s average deviation from its ideal racing line stayed under 40 cm (about 16 inches) at every point on the track. The human driver had much wider variation, but this is by no means considered an error; they were changing the line for their own reasons.

“We focused on a segment of the track with a variety of turns that provided the comparison we needed and allowed us to gather more data sets,” wrote Spielberg in an email to TechCrunch. “We have done full lap comparisons and the same trends hold. Shelley has an advantage of consistency while the human drivers have the advantage of changing their line as the car changes, something we are currently implementing.”

Shelley showed far lower variation in its times than the racer, but the racer also posted considerably lower times on several laps. The averages for the segments evaluated were about comparable, with a slight edge going to the human.

This is pretty impressive considering the simplicity of the self-driving model. It had very little real-world knowledge going into its systems, mostly the results of a simulation giving it an approximate idea of how it ought to be handling moment by moment. And its feedback was very limited — it didn’t have access to all the advanced telemetry that self-driving systems often use to flesh out the scene.

The conclusion is that this type of approach, with a relatively simple model controlling the car beyond ordinary handling conditions, is promising. It would need to be tweaked for each surface and setup — obviously a rear-wheel-drive car on a dirt road would be different than front-wheel on tarmac. How best to create and test such models is a matter for future investigation, though the team seemed confident it was a mere engineering challenge.

The experiment was undertaken in order to pursue the still-distant goal of self-driving cars being superior to humans on all driving tasks. The results from these early tests are promising, but there’s still a long way to go before an AV can take on a pro head-to-head. But I look forward to the occasion.

from Gadgets – TechCrunch https://ift.tt/2HJ8t94

FarmWise turns to Roush to build autonomous vegetable weeders

FarmWise wants robots to do the dirty part of farming: weeding. With that thought, the San Francisco-based startup enlisted the help of Michigan-based manufacturing and automotive company Roush to build prototypes of the self-driving robots. An early prototype is pictured above.

Financial details of the collaboration were not released.

The idea is that these autonomous weeders will replace herbicides and save growers on labor. By using high-precision weeding, the robotic farm hands can increase crop yields by working day and night to remove unwanted plants and weeds. After all, herbicides are used in part because weeding is a terrible job.

With Roush, FarmWise will build a dozen prototypes in 2019 with the intention of scaling to additional units in 2020. But why Michigan?

“Michigan is well-known throughout the world for its manufacturing and automotive industries, the advanced technology expertise and state-of-the-art manufacturing practices,” Thomas Palomares, FarmWise co-founder and CTO said. “These are many of the key ingredients we need to manufacture and test our machines. We were connected to Roush through support from PlanetM, and as a technology startup, joining forces with a large and well-respected legacy automaker is critical to support the scale of our manufacturing plan.”

Roush has a long history in Michigan as a leading manufacturer of high-performance auto parts. More recently, the company has expanded its focus to using its manufacturing expertise elsewhere, including robotics and alternative fuel system design.

“This collaboration showcases the opportunities that result from connecting startups like FarmWise with Michigan-based companies like Roush that bring their manufacturing know-how to making these concepts a reality,” said Trevor Pawl, group vice president of PlanetM, Pure Michigan Business Connect and International Trade at the Michigan Economic Development Corporation. “We are excited to see this collaboration come to fruition. It is a great example of how Michigan can bring together emerging companies globally seeking prototype and production support with our qualified manufacturing base in the state.”

FarmWise was founded in 2016 and has raised $5.7 million through a seed-stage investment including an investment from Playground Global. TechCrunch first saw FarmWise during Alchemist Accelerator’s batch 15 demo day.

from Gadgets – TechCrunch https://ift.tt/2TEdTDY

Game streaming’s multi-industry melee is about to begin

Almost exactly 10 years ago, I was at GDC participating in a demo of a service I didn’t think could exist: OnLive. The company had promised high-definition, low-latency streaming of games at a time when real broadband was uncommon, mobile gaming was still defined by Bejeweled (though Angry Birds was about to change that), and Netflix was still mainly in the DVD-shipping business.

Although the demo went well, the failure of OnLive and its immediate successors to gain any kind of traction or launch beyond a few select markets indicated that while it may be in the future of gaming, streaming wasn’t in its present.

Well, now it’s the future. Bandwidth is plentiful, speeds are rising, games are shifting from things you buy to services you subscribe to, and millions prefer to pay a flat fee per month rather than worry about buying individual movies, shows, tracks, or even cheeses.

Consequently, as of this week — specifically as of Google’s announcement of Stadia on Tuesday — we see practically every major tech and gaming company attempting to do the same thing. Like the beginning of a chess game, the board is set or nearly so, and each company brings a different set of competencies and potential moves to the approaching fight. Each faces different challenges as well, though they share a few as a set.

Google and Amazon bring cloud-native infrastructure and familiarity online, but is that enough to compete with the gaming know-how of Microsoft, with its own cloud clout, or Sony, which made strategic streaming acquisitions and has a service up and running already? What of the third parties like Nvidia and Valve, publishers and storefronts that may leverage consumer trust and existing games libraries to jump start a rival? It’s a wide-open field, all right.

Before we examine them, however, it is perhaps worthwhile to entertain a brief introduction to the gaming space as it stands today and the trends that have brought it to this point.

from Gadgets – TechCrunch https://ift.tt/2FxJcLr

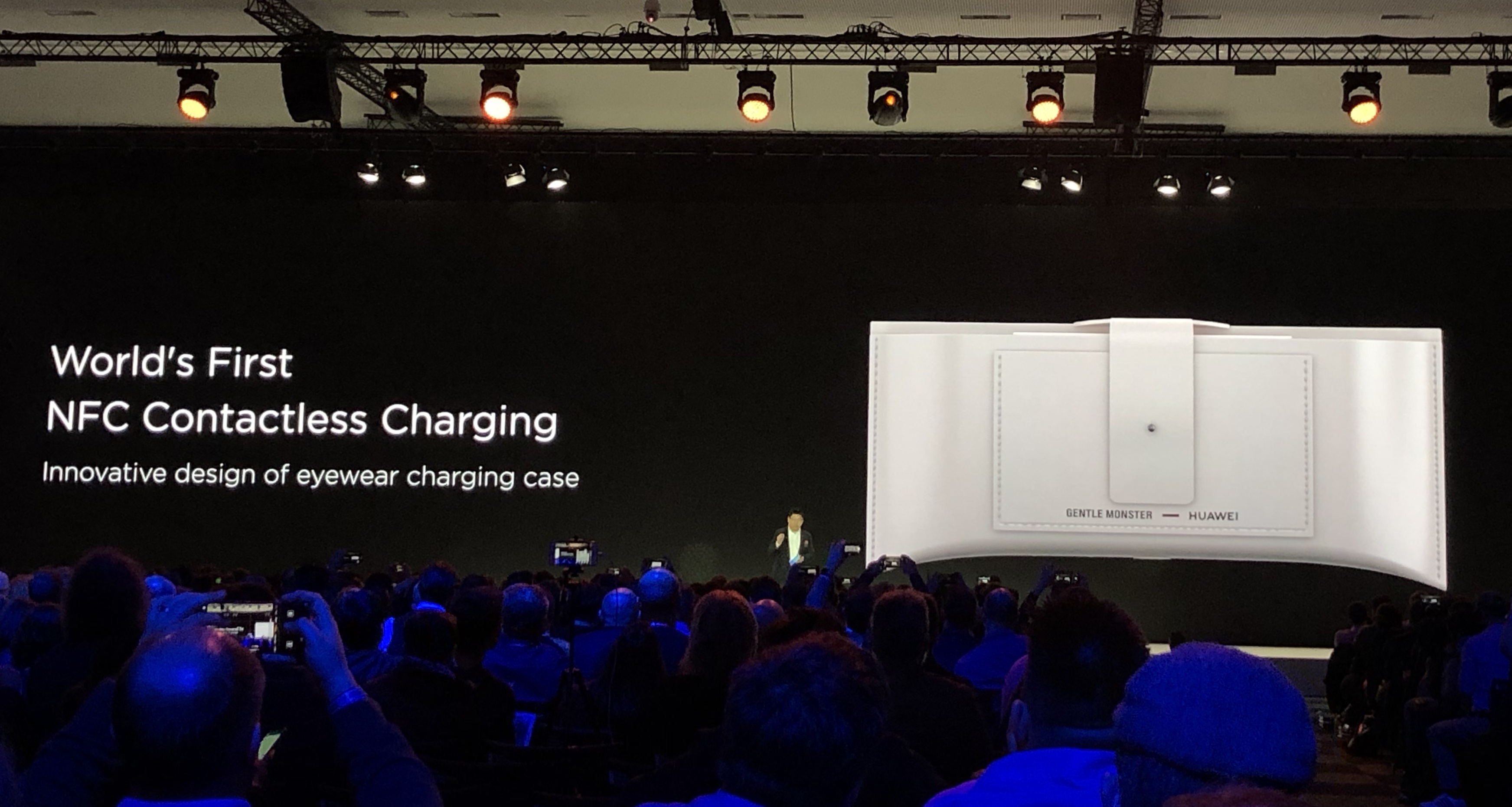

Huawei announces smart glasses in partnership with Gentle Monster

Huawei is launching connected glasses in partnership with Gentle Monster, a Korean sunglasses and optical glasses brand. There won’t be a single model, but a collection of glasses with integrated electronics.

Huawei is positioning the glasses as a sort of earbuds replacement, a device that lets you talk on the phone without putting anything in your ears. There’s no button on the device, but you can tap the temple of the glasses to answer a call for instance.

The antenna, charging module, dual microphone, chipset, speaker and battery are all integrated in the eyeglass temple. There are two microphones with beam-forming technology to understand what you’re saying even if the device is sitting on your nose.
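Huawei hasn’t said how its beam-forming works. As a generic illustration only, a two-microphone delay-and-sum beamformer shows the basic idea: delay one channel so that sound from the wanted direction (the wearer’s mouth) lines up in both, then add them. The 2 cm microphone spacing, 16 kHz sample rate and whole-sample delays are assumptions chosen for the example; real products often use fractional delays and adaptive filtering on top of this.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at room temperature


def steering_delay_samples(mic_spacing_m: float, angle_rad: float, sample_rate_hz: int) -> int:
    """Whole-sample arrival delay between two mics for a plane wave arriving at
    angle_rad, measured from the line through both mics (0 = sound travels
    along that line, giving the largest delay)."""
    delay_s = mic_spacing_m * math.cos(angle_rad) / SPEED_OF_SOUND_M_S
    return round(sample_rate_hz * delay_s)


def delay_and_sum(mic_a: list[float], mic_b: list[float], delay_samples: int) -> list[float]:
    """Advance mic_b by delay_samples so the target sound lines up with mic_a,
    then average the two channels. Sound from the steered direction adds
    coherently; sound from other directions stays misaligned and is partly
    attenuated."""
    if delay_samples < 0:
        raise ValueError("this sketch assumes the target reaches mic_a first")
    n = min(len(mic_a), len(mic_b) - delay_samples)
    return [(mic_a[i] + mic_b[i + delay_samples]) / 2 for i in range(n)]
```

With the assumed 2 cm spacing at 16 kHz, `steering_delay_samples(0.02, 0.0, 16000)` gives a one-sample delay for sound arriving along the microphone axis, while sound arriving broadside needs no delay at all.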

There are stereo speakers positioned right above your ears. The company wants you to hear sound without disturbing your neighbors.

Interestingly, there’s no camera on the device. Huawei wants to avoid any privacy debate by skipping the camera altogether. Given that people have no issue with voice assistants and being surrounded by microphones, maybe people won’t be too suspicious.

The glasses come in a leather case with a USB-C port at the bottom, and the case supports wireless charging as well. Huawei teased the glasses at the P30 press conference in Paris, but the glasses won’t be available before July 2019.

from Gadgets – TechCrunch https://ift.tt/2UW0att