Monday, 25 March 2019

Lutron Caséta Fan Control review: Smart control for your dumb ceiling fan

from Macworld https://ift.tt/2Hz9n7Y

via IFTTT

The best ways to watch March Madness without cable

from Macworld https://ift.tt/2OguOeE

via IFTTT

Amazon's All-new Kindle for $89.99 is priced for budget users

from Macworld https://ift.tt/2CuiUsW

via IFTTT

The WD My Passport 4TB now costs just $100 on Amazon, a 29% price drop

from Macworld https://ift.tt/2OhCxJg

via IFTTT

Apple could charge $9.99 per month each for HBO, Showtime and Starz

The Wall Street Journal has published a report on Apple’s media push. The company is about to unveil a new video streaming service and an Apple News subscription on Monday.

According to The WSJ, you’ll be able to subscribe to multiple content packages to increase the video library in a new app called Apple TV — it’s unclear if this app is going to replace the existing Apple TV app.

The service would work more or less like Amazon Prime Video Channels. Users will be able to subscribe to HBO, Showtime or Starz for a monthly fee. The WSJ says that these three partners would charge $9.99 per month each.

According to a previous report from CNBC, it differs from the existing Apple TV app as you won’t be redirected to another app. Everything will be available within a single app.

Controlling the experience from start to finish would be a great advantage for users. As many people now suffer from subscription fatigue, Apple would be able to centralize all your content subscriptions in a single app. You could tick and untick options depending on your needs.

But some companies probably don’t want to partner with Apple. It’s highly unlikely that you’ll find Netflix or Amazon Prime Video content in the Apple TV app. Those services also want to control the experience from start to finish. It’s also easier to gather data analytics when subscribers are using your own app.

Apple should open up the Apple TV app to other platforms. Just like you can play music on Apple Music on Android, a Sonos speaker or an Amazon Echo speaker, Apple is working on apps for smart TVs. The company has already launched iTunes Store apps on Samsung TVs, so it wouldn’t be a big surprise.

The company has also spent a ton of money on original content for its own service. Details are still thin on this front. Many of those shows might not be ready for Monday. Do you have to pay to access Apple’s content too? How much? We’ll find out on Monday.

When it comes to Apple News, The WSJ says that content from 200 magazines and newspapers will be available for $9.99 per month. The Wall Street Journal confirms a New York Times report that said that The Wall Street Journal was part of the subscription.

Apple is also monitoring the App Store to detect popular apps according to multiple metrics, The WSJ says. Sure, Apple runs the App Store. But Facebook faced a public outcry when people realized that Facebook was monitoring popular apps with a VPN app called Onavo.

from Gadgets – TechCrunch https://ift.tt/2TVUymv

via IFTTT

Apple could announce its gaming subscription service on Monday

Apple is about to announce some new services on Monday. While everybody expects a video streaming service as well as a news subscription, a new report from Bloomberg says that the company might also mention its gaming subscription.

Cheddar first reported back in January that Apple has been working on a gaming subscription. Users could pay a monthly subscription fee to access a library of games. We’re most likely talking about iOS games for the iPhone and iPad here.

Games are the most popular category on the App Store, so it makes sense to turn this category into a subscription business. And yet, most of them are free-to-play, ad-supported games. Apple doesn’t necessarily want to target those games in particular.

According to Bloomberg, the service will focus on paid games from third-party developers, such as Minecraft, NBA 2K games and the GTA franchise. Users would essentially pay to access this bundle of games. Apple would redistribute revenue to game developers based on how much time users spend within a game in particular.
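To make the time-based payout idea concrete, here is a minimal sketch of a pro-rata split. Bloomberg's report only says revenue would be shared based on time spent in each game; the exact formula, the size of the pool, the game names and the `split_revenue` function below are all assumptions for illustration.

```python
def split_revenue(pool, minutes_by_game):
    """Split a revenue pool across games in proportion to minutes played.

    Hypothetical model: the report does not describe Apple's actual formula.
    """
    total_minutes = sum(minutes_by_game.values())
    if total_minutes == 0:
        # Nobody played anything, so there is nothing to apportion.
        return {game: 0.0 for game in minutes_by_game}
    return {
        game: pool * minutes / total_minutes
        for game, minutes in minutes_by_game.items()
    }


# Made-up numbers: a $100 pool and three placeholder games.
shares = split_revenue(100.0, {"Game A": 600, "Game B": 300, "Game C": 100})
for game, amount in shares.items():
    print(f"{game}: ${amount:.2f}")
```

A split like this rewards games people keep coming back to, which is one reason such a model would sit awkwardly with short, finish-in-an-evening titles.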

It’s still unclear whether Apple will announce the service or launch it on Monday. The gaming industry is more fragmented than the movie and TV industry, so it makes sense to talk about the service publicly even if it’s not ready just yet.

from Gadgets – TechCrunch https://ift.tt/2TtMfsY

via IFTTT

The damage of defaults

Apple popped out a new pair of AirPods this week. The design looks exactly like the old pair of AirPods. Which means I’m never going to use them because Apple’s bulbous earbuds don’t fit my ears. Think square peg, round hole.

The only way I could rock AirPods would be to walk around with hands clamped to the sides of my head to stop them from falling out. Which might make a nice cut in a glossy Apple ad for the gizmo — suggesting a feeling of closeness to the music, such that you can’t help but cup; a suggestive visual metaphor for the aural intimacy Apple surely wants its technology to communicate.

But the reality of trying to use earbuds that don’t fit is not that at all. It’s just shit. They fall out at the slightest movement so you either sit and never turn your head or, yes, hold them in with your hands. Oh hai, hands-not-so-free-pods!

The obvious point here is that one size does not fit all — howsoever much Apple’s Jony Ive and his softly spoken design team believe they have devised a universal earbud that pops snugly in every ear and just works. Sorry, nope!

Hi @tim_cook, I fixed that sketch for you. Introducing #InPods — because one size doesn’t fit all

pic.twitter.com/jubagMnwjt

— Natasha (@riptari) March 20, 2019

A proportion of iOS users — perhaps other petite women like me, or indeed men with less capacious ear holes — are simply being removed from Apple’s sales equation where earbuds are concerned. Apple is pretending we don’t exist.

Sure we can just buy another brand of more appropriately sized earbuds. The in-ear, noise-canceling kind are my preference. Apple does not make ‘InPods’. But that’s not a huge deal. Well, not yet.

It’s true, the consumer tech giant did also delete the headphone jack from iPhones. Thereby depreciating my existing pair of wired in-ear headphones (if I ever upgrade to a 3.5mm-jack-less iPhone). But I could just shell out for Bluetooth wireless in-ear buds that fit my shell-like ears and carry on as normal.

Universal in-ear headphones have existed for years, of course. A delightful design concept. You get a selection of different sized rubber caps shipped with the product and choose the size that best fits.

Unfortunately Apple isn’t in the ‘InPods’ business though. Possibly for aesthetic reasons. Most likely because — and there’s more than a little irony here — an in-ear design wouldn’t be naturally roomy enough to fit all the stuff Siri needs to, y’know, fake intelligence.

Which means people like me with small ears are being passed over in favor of Apple’s voice assistant. So that’s AI: 1, non-‘standard’-sized human: 0. Which also, unsurprisingly, feels like shit.

I say ‘yet’ because if voice computing does become the next major computing interaction paradigm, as some believe — given how Internet connectivity is set to get baked into everything (and sticking screens everywhere would be a visual and usability nightmare; albeit microphones everywhere is a privacy nightmare… ) — then the minority of humans with petite earholes will be at a disadvantage vs those who can just pop in their smart, sensor-packed earbud and get on with telling their Internet-enabled surroundings to do their bidding.

Will parents of future generations of designer babies select for adequately capacious earholes so their child can pop an AI in? Let’s hope not.

We’re also not at the voice computing singularity yet. Outside the usual tech bubbles it remains a bit of a novel gimmick. Amazon has drummed up some interest with in-home smart speakers housing its own voice AI Alexa (a brand choice that has, incidentally, caused a verbal headache for actual humans called Alexa). Though its Echo smart speakers appear to mostly get used as expensive weather checkers and egg timers. Or else for playing music — a function that a standard speaker or smartphone will happily perform.

Certainly a voice AI is not something you need with you 24/7 yet. Prodding at a touchscreen remains the standard way of tapping into the power and convenience of mobile computing for the majority of consumers in developed markets.

The thing is, though, it still grates to be ignored. To be told — even indirectly — by one of the world’s wealthiest consumer technology companies that it doesn’t believe your ears exist.

Or, well, that it’s weighed up the sales calculations and decided it’s okay to drop a petite-holed minority on the cutting room floor. So that’s ‘ear meet AirPod’. Not ‘AirPod meet ear’ then.

But the underlying issue is much bigger than Apple’s (in my case) oversized earbuds. Its latest shiny set of AirPods are just an ill-fitting reminder of how many technology defaults simply don’t ‘fit’ the world as claimed.

Because if cash-rich Apple’s okay with promoting a universal default (that isn’t), think of all the less well resourced technology firms chasing scale for other single-sized, ill-fitting solutions. And all the problems flowing from attempts to mash ill-mapped technology onto society at large.

When it comes to wrong-sized physical kit I’ve had similar issues with standard office computing equipment and furniture. Products that seem (surprise, surprise!) to have been default designed with a 6ft strapping guy in mind. Keyboards so long they end up gifting the smaller user RSI. Office chairs that deliver chronic back-pain as a service. Chunky mice that quickly wrack the hand with pain. (Apple is a historical offender there too I’m afraid.)

The fix for such ergonomic design failures is simply not to use the kit. To find a better-sized (often DIY) alternative that does ‘fit’.

But a DIY fix may not be an option when discrepancy is embedded at the software level — and where a system is being applied to you, rather than you the human wanting to augment yourself with a bit of tech, such as a pair of smart earbuds.

With software, embedded flaws and system design failures may also be harder to spot because it’s not necessarily immediately obvious there’s a problem. Oftentimes algorithmic bias isn’t visible until damage has been done.

And there’s no shortage of stories already about how software defaults configured for a biased median have ended up causing real-world harm. (See for example: ProPublica’s analysis of the COMPAS recidivism tool, software it found incorrectly judging black defendants more likely to offend than white defendants. So software amplifying existing racial prejudice.)

Of course AI makes this problem so much worse.

Which is why the emphasis must be on catching bias in the datasets — before there is a chance for prejudice or bias to be ‘systematized’ and get baked into algorithms that can do damage at scale.

The algorithms must also be explainable. And outcomes auditable. Transparency as disinfectant; not secret blackboxes stuffed with unknowable code.

Doing all this requires huge up-front thought and effort on system design, and an even bigger change of attitude. It also needs massive, massive attention to diversity. An industry-wide championing of humanity’s multifaceted and multi-sized reality — and to making sure that’s reflected in both data and design choices (and therefore the teams doing the design and dev work).

You could say what’s needed is a recognition there’s never, ever a one-sized-fits all plug.

Indeed, that all algorithmic ‘solutions’ are abstractions that make compromises on accuracy and utility. And that those trade-offs can become viciously cutting knives that exclude, deny, disadvantage, delete and damage people at scale.

Expensive earbuds that won’t stay put are just a handy visual metaphor.

And while discussion about the risks and challenges of algorithmic bias has stepped up in recent years, as AI technologies have proliferated — with mainstream tech conferences actively debating how to “democratize AI” and bake diversity and ethics into system design via a development focus on principles like transparency, explainability, accountability and fairness — the industry has not even begun to fix its diversity problem.

It’s barely moved the needle on diversity. And its products continue to reflect that fundamental flaw.

Stanford just launched their Institute for Human-Centered Artificial Intelligence (@StanfordHAI) with great fanfare. The mission: "The creators and designers of AI must be broadly representative of humanity."

121 faculty members listed.

Not a single faculty member is Black. pic.twitter.com/znCU6zAxui

— Chad Loder ❁ (@chadloder) March 21, 2019

Many — if not most — of the tech industry’s problems can be traced back to the fact that inadequately diverse teams are chasing scale while lacking the perspective to realize their system design is repurposing human harm as a de facto performance measure. (Although ‘lack of perspective’ is the charitable interpretation in certain cases; moral vacuum may be closer to the mark.)

As WWW creator, Sir Tim Berners-Lee, has pointed out, system design is now society design. That means engineers, coders, AI technologists are all working at the frontline of ethics. The design choices they make have the potential to impact, influence and shape the lives of millions and even billions of people.

And when you’re designing society a median mindset and limited perspective cannot ever be an acceptable foundation. It’s also a recipe for product failure down the line.

The current backlash against big tech shows that the stakes and the damage are very real when poorly designed technologies get dumped thoughtlessly on people.

Life is messy and complex. People won’t fit a platform that oversimplifies and overlooks. And if your excuse for scaling harm is ‘we just didn’t think of that’ you’ve failed at your job and should really be headed out the door.

Because the consequences for being excluded by flawed system design are also scaling and stepping up as platforms proliferate and more life-impacting decisions get automated. Harm is being squared. Even as the underlying industry drum hasn’t skipped a beat in its prediction that everything will be digitized.

Which means that horribly biased parole systems are just the tip of the ethical iceberg. Think of healthcare, social welfare, law enforcement, education, recruitment, transportation, construction, urban environments, farming, the military, the list of what will be digitized — and of manual or human overseen processes that will get systematized and automated — goes on.

Software — runs the industry mantra — is eating the world. That means badly designed technology products will harm more and more people.

But responsibility for sociotechnical misfit can’t just be scaled away as so much ‘collateral damage’.

So while an ‘elite’ design team led by a famous white guy might be able to craft a pleasingly curved earbud, such an approach cannot and does not automagically translate into AirPods with perfect, universal fit.

It’s someone’s standard. It’s certainly not mine.

We can posit that a more diverse Apple design team might have been able to rethink the AirPod design so as not to exclude those with smaller ears. Or make a case to convince the powers that be in Cupertino to add another size choice. We can but speculate.

What’s clear is the future of technology design can’t be so stubborn.

It must be radically inclusive and incredibly sensitive. Human-centric. Not locked to damaging defaults in its haste to impose a limited set of ideas.

Above all, it needs a listening ear on the world.

Indifference to difference and a blindspot for diversity will find no future here.

from Gadgets – TechCrunch https://ift.tt/2OtNU1b

via IFTTT

Gates-backed Lumotive upends lidar conventions using metamaterials

Pretty much every self-driving car on the road, not to mention many a robot and drone, uses lidar to sense its surroundings. But useful as lidar is, it also involves physical compromises that limit its capabilities. Lumotive is a new company with funding from Bill Gates and Intellectual Ventures that uses metamaterials to exceed those limits, perhaps setting a new standard for the industry.

The company is just now coming out of stealth, but it’s been in the works for a long time. I actually met with them back in 2017 when the project was very hush-hush and operating under a different name at IV’s startup incubator. If the terms “metamaterials” and “Intellectual Ventures” tickle something in your brain, it’s because IV has spawned several startups that use intellectual property developed there, building on the work of materials scientist David Smith.

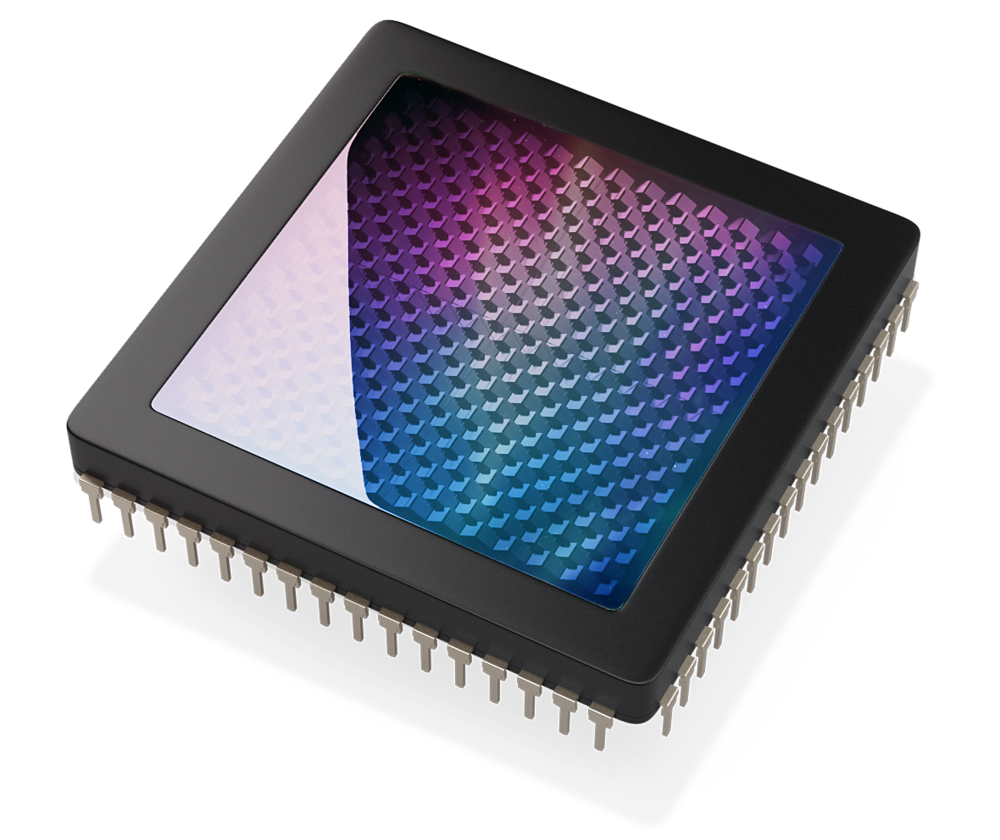

Metamaterials are essentially specially engineered surfaces with microscopic structures — in this case, tunable antennas — embedded in them, working as a single device.

Echodyne is another company that used metamaterials to great effect, shrinking radar arrays to pocket size by engineering a radar transceiver that’s essentially 2D and can have its beam steered electronically rather than mechanically.

The principle works for pretty much any wavelength of electromagnetic radiation — i.e. you could use X-rays instead of radio waves — but until now no one has made it work with visible light. That’s Lumotive’s advance, and the reason it works so well.

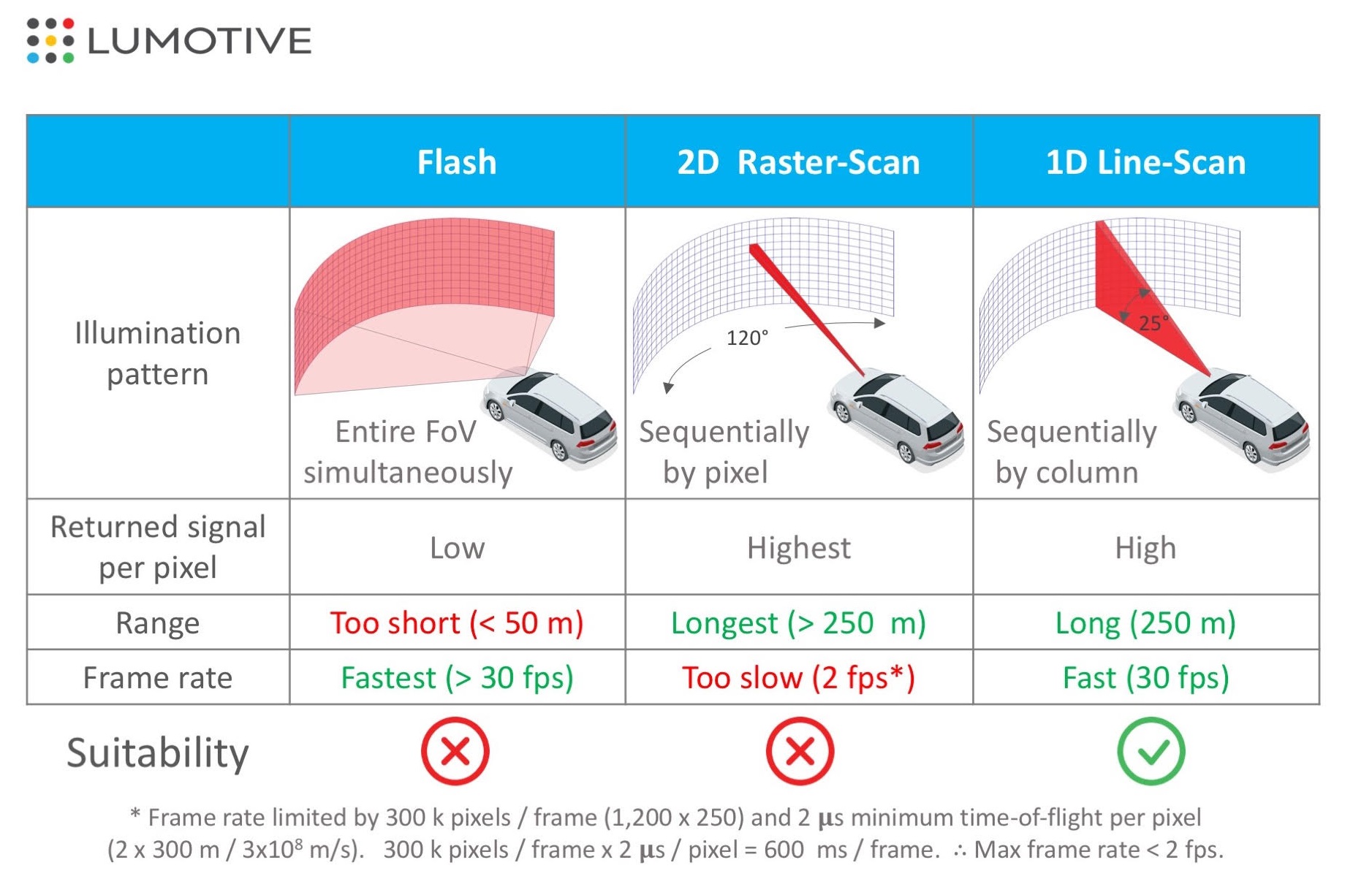

Flash, 2D and 1D lidar

Lidar basically works by bouncing light off the environment and measuring how and when it returns; this can be accomplished in several ways.
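The "when it returns" part is usually a time-of-flight calculation: light goes out, bounces back, and half the round trip multiplied by the speed of light gives the range. The sketch below assumes simple pulsed time-of-flight ranging and uses the vacuum speed of light; the function names are made up for illustration and nothing here is specific to any vendor's hardware.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact SI value, in vacuum


def distance_from_round_trip(round_trip_seconds):
    """Range to a target given the out-and-back travel time of a pulse."""
    # The pulse covers the distance twice, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_for_distance(distance_m):
    """Inverse: how long a pulse takes to reach a target and come back."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


# A pulse that returns after one microsecond hit something about 150 m away.
one_microsecond_range = distance_from_round_trip(1e-6)
print(f"{one_microsecond_range:.1f} m")
```

The timescales are the striking part: a target 150 meters out answers in about a millionth of a second, which is why the bottleneck in these systems tends to be how the beam is moved rather than how long the light takes.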

Flash lidar basically sends out a pulse that illuminates the whole scene with near-infrared light (905 nanometers, most likely) at once. This provides a quick measurement of the whole scene, but limited distance as the power of the light being emitted is limited.

2D or raster scan lidar takes an NIR laser and plays it over the scene incredibly quickly, left to right, down a bit, then does it again, again and again… scores or hundreds of times. Focusing the power into a beam gives these systems excellent range, but similar to a CRT TV with an electron beam tracing out the image, it takes rather a long time to complete the whole scene. Turnaround time is naturally of major importance in driving situations.

1D or line scan lidar strikes a balance between the two, using a vertical line of laser light that only has to go from one side to the other to complete the scene. This sacrifices some range and resolution but significantly improves responsiveness.

Lumotive offered the following diagram, which helps visualize the systems, although obviously “suitability” and “too short” and “too slow” are somewhat subjective:

The main problem with the latter two is that they rely on a mechanical platform to actually move the laser emitter or mirror from place to place. It works fine for the most part, but there are inherent limitations. For instance, it’s difficult to stop, slow or reverse a beam that’s being moved by a high-speed mechanism. If your 2D lidar system sweeps over something that could be worth further inspection, it has to go through the rest of its motions before coming back to it… over and over.

This is the primary advantage offered by a metamaterial system over existing ones: electronic beam steering. In Echodyne’s case the radar could quickly sweep over its whole range like normal, and upon detecting an object could immediately switch over and focus 90 percent of its cycles tracking it in higher spatial and temporal resolution. The same thing is now possible with lidar.

Imagine a deer jumping out around a blind curve. Every millisecond counts because the earlier a self-driving system knows the situation, the more options it has to accommodate it. All other things being equal, an electronically steered lidar system would detect the deer at the same time as the mechanically steered ones, or perhaps a bit sooner; upon noticing this movement, it could not just make more time for evaluating it on the next “pass,” but a microsecond later be backing up the beam and specifically targeting just the deer with the majority of its resolution.

Targeted illumination would also improve the estimation of direction and speed, further improving the driving system’s knowledge and options. Meanwhile, the beam can still dedicate a portion of its cycles to watching the road, requiring no complicated mechanical hijinks to do so. It also has an enormous aperture, allowing high sensitivity.

In terms of specs, it depends on many things, but if the beam is just sweeping normally across its 120×25 degree field of view, the standard unit will have about a 20Hz frame rate, with a 1000×256 resolution. That’s comparable to competitors, but keep in mind that the advantage is in the ability to change that field of view and frame rate on the fly. In the example of the deer, it may maintain a 20Hz refresh for the scene at large but concentrate more beam time on a 5×5 degree area, giving it a much faster rate.
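As a rough, back-of-the-envelope sketch of that trade-off, the snippet below treats the quoted 1000×256 resolution at 20Hz as a fixed budget of points per second and asks how often a 5×5 degree patch could be revisited if a slice of that budget were redirected to it. The fixed-budget model, the even angular spacing, the 10 percent slice and every function name are assumptions for illustration, not Lumotive's specs.

```python
def points_per_second(cols, rows, frame_hz):
    """Total measurement points per second for a full-frame scan."""
    return cols * rows * frame_hz


def region_points(cols, rows, fov_h_deg, fov_v_deg, roi_h_deg, roi_v_deg):
    """Points needed to cover a region of interest at full-frame density.

    Assumes points are spread evenly across the field of view.
    """
    return (roi_h_deg / fov_h_deg * cols) * (roi_v_deg / fov_v_deg * rows)


def roi_revisit_hz(budget_pps, roi_points, fraction):
    """Revisit rate for the region if `fraction` of the budget goes to it."""
    return budget_pps * fraction / roi_points


# Figures quoted in the article: 120x25 degree view, 1000x256 points, 20Hz.
budget = points_per_second(1000, 256, 20)
deer_patch = region_points(1000, 256, 120.0, 25.0, 5.0, 5.0)

# Assumption: 10 percent of the point budget is diverted to the 5x5 patch.
deer_rate = roi_revisit_hz(budget, deer_patch, 0.10)
print(f"{budget} points/s, patch of ~{deer_patch:.0f} points, ~{deer_rate:.0f} Hz")
```

In this idealized model, a tenth of the beam time revisits the patch roughly 240 times per second. The real figure would depend on steering overhead and on how the rest of the scene is sampled, neither of which the company has detailed.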

Meta doesn’t mean mega-expensive

Naturally one would assume that such a system would be considerably more expensive than existing ones. Pricing is still a ways out — Lumotive just wanted to show that its tech exists for now — but this is far from exotic tech.

The team told me in an interview that their engineering process was tricky specifically because they designed it for fabrication using existing methods. It’s silicon-based, meaning it can use cheap and ubiquitous 905nm lasers rather than the rarer 1550nm, and its fabrication isn’t much more complex than making an ordinary display panel.

CTO and co-founder Gleb Akselrod explained: “Essentially it’s a reflective semiconductor chip, and on the surface we fabricate these tiny antennas to manipulate the light. It’s made using a standard semiconductor process, then we add liquid crystal, then the coating. It’s a lot like an LCD.”

An additional bonus of the metamaterial basis is that it works the same regardless of the size or shape of the chip. While an inch-wide rectangular chip is best for automotive purposes, Akselrod said, they could just as easily make one a quarter the size for robots that don’t need the wider field of view, or a larger or custom-shape one for a specialty vehicle or aircraft.

The details, as I said, are still being worked out. Lumotive has been working on this for years and decided it was time to just get the basic information out there. “We spend an inordinate amount of time explaining the technology to investors,” noted CEO and co-founder Bill Colleran. He, it should be noted, is a veteran innovator in this field, having headed Impinj most recently and worked at Broadcom before that, but is perhaps best known for being CEO of Innovent when it created the first CMOS Bluetooth chip.

Right now the company is seeking investment after running on a 2017 seed round funded by Bill Gates and IV, which (as with other metamaterial-based startups it has spun out) is granting Lumotive an exclusive license to the tech. There are partnerships and other things in the offing, but the company wasn’t ready to talk about them; the product is currently in prototype but very showable form for the inevitable meetings with automotive and tech firms.

from Gadgets – TechCrunch https://ift.tt/2OmYw1r

via IFTTT

This is what the Huawei P30 will look like

You can already find many leaked photos of Huawei’s next flagship devices, the P30 and P30 Pro. The company is set to announce the new phones at an event in Paris next week. So here’s what you should expect.

Reliable phone leaker Evan Blass tweeted many different photos of the new devices in three different tweets:

— Evan Blass (@evleaks) March 20, 2019

As you can see, both devices feature three cameras on the back of the device. The notch is getting smaller and now looks like a teardrop. Compared to the P20 and P20 Pro, the fingerprint sensor is gone. It looks like Huawei is going to integrate the fingerprint sensor in the display just like Samsung did with the Samsung Galaxy S10.

Also, mysmartprice shared some ads with some specifications. The P30 Pro will have a 10x hybrid zoom while the P30 will have a 5x hybrid zoom — it’s unclear how it’ll work to combine a hardware zoom with a software zoom. Huawei has been doing some good work on the camera front, so this is going to be a key part of next week’s presentation.

For the first time, Huawei will put wireless charging in its flagship device — it’s about time. And it looks like the P30 Pro will adopt a curved display for the first time, as well. I’ll be covering the event next week, so stay tuned.

from Gadgets – TechCrunch https://ift.tt/2Wecnde

via IFTTT

Apple announces new AirPods

Apple has just announced the second-generation AirPods.

The new AirPods are fitted with the H1 chip, which is meant to offer performance efficiencies, faster connect times between the pods and your devices and the ability to ask for Siri hands-free with the “Hey Siri” command.

Because of its performance efficiency, the H1 chip also allows for the AirPods to offer 50 percent more talk time using the headphones. Switching between devices is 2x faster than the previous-generation AirPods, according to Apple.

Here’s what Phil Schiller had to say in the press release:

AirPods delivered a magical wireless experience and have become one of the most beloved products we’ve ever made. They connect easily with all of your devices, and provide crystal clear sound and intuitive, innovative control of your music and audio. The world’s best wireless headphones just got even better with the new AirPods. They are powered by the new Apple-designed H1 chip which brings an extra hour of talk time, faster connections, hands-free ‘Hey Siri’ and the convenience of a new wireless battery case.

The second-gen AirPods are available with the standard wired charging case ($159), or a new Wireless Charging Case ($199). A standalone wireless charging case is also available for purchase ($79). We’ve reached out to Apple to ask if the wireless case is backward compatible with first-gen AirPods and will update the post once we know more.

Update: Turns out, the wireless charging case works “with all generations of AirPods,” according to the Apple website listing.

Two times faster switching and 50 percent more talk time might sound like small perks for a relatively expensive accessory, but I’ve found that two of my biggest pain points with the AirPods are the lack of voice control and the time it takes to switch devices. The introduction of “Hey Siri” alongside the new H1 chip, as well as much faster switching between devices, should noticeably elevate the user experience with these new AirPods.

After a search through the Apple website, it appears that old AirPods are no longer available for sale.

The new AirPods are available to order today from Apple.com and the Apple Store app, with in-store availability beginning next week.

from Gadgets – TechCrunch https://ift.tt/2U3ZIfs

via IFTTT

Markforged raises $82 million for its industrial 3D printers

3D printer manufacturer Markforged has raised another round of funding. Summit Partners is leading the $82 million Series D round with Matrix Partners, Microsoft’s Venture Arm, Next47 and Porsche SE also participating.

When you think about 3D printers, chances are you’re thinking about microwave-sized, plastic-focused 3D printers for hobbyists. Markforged is basically at the other end of the spectrum, focused on expensive 3D printers for industrial use cases.

In addition to increased precision, Markforged can manufacture parts in strong materials, such as carbon fiber, Kevlar or stainless steel. And it can greatly impact your manufacturing process.

For instance, you can prototype your next products with a Markforged printer. Instead of getting sample parts from third-party companies, you can manufacture your parts in house. If you’re not going to sell hundreds of thousands of products, you could even consider using Markforged to produce parts for your commercial products.

If you work in an industry that requires a ton of different parts but don’t need a lot of inventory, you could also consider using a 3D printer to manufacture parts whenever you need them.

Markforged has a full-stack approach and controls everything from the 3D printer to the software and materials. Once you’re done designing your CAD 3D model, you can send it to your fleet of printers. The company’s application also lets you manage different versions of the same part and collaborate with other people.

According to the company’s website, Markforged has attracted 10,000 customers, such as Canon, Microsoft, Google, Amazon, General Motors, Volkswagen and Adidas. The company shipped 2,500 printers in 2018.

With today’s funding round, the company plans to do more of the same — you can expect mass production printers and more materials in the future. Eventually, Markforged wants to make it cheaper to manufacture parts at scale instead of producing those parts through other means.

Correction: An earlier version of this post said that Markforged had 4,000 customers. That was an outdated number, the company now has 10,000 customers.

from Gadgets – TechCrunch https://ift.tt/2YccebZ

via IFTTT

The 9 biggest questions about Google’s Stadia game streaming service

Google’s Stadia is an impressive piece of engineering to be sure: Delivering high definition, high framerate, low latency video to devices like tablets and phones is an accomplishment in itself. But the game streaming service faces serious challenges if it wants to compete with the likes of Xbox and PlayStation, or even plain old PCs and smartphones.

Here are our nine biggest questions about what the service will be and how it’ll work.

1. What’s the game selection like?

We saw Assassin’s Creed: Odyssey (a lot) and Doom: Eternal, and a few other things running on Stadia, but otherwise Google’s presentation was pretty light on details as far as what games exactly we can expect to see on there.

It’s not an easy question to answer, since this isn’t just a question of “all PC games,” or “all games from these 6 publishers.” Stadia requires a game be ported, or partly recoded to fit its new environment — in this case a Linux-powered PC. That’s not unusual, but it isn’t trivial either.

Porting is just part of the job for a major studio like Ubisoft, which regularly publishes on multiple platforms simultaneously, but for a smaller developer or a more specialized game, it’s not so straightforward. Jade Raymond will be in charge of both first-party games made just for Stadia and developer relations; she said that the team will be “working with external developers to bring all of the bleeding edge Google technology you have seen today available to partner studios big and small.”

What that tells me is that every game that comes to Stadia will require special attention. That’s not a good sign for selection, but it does suggest that anything available on it will run well.

2. What will it cost?

Perhaps the topic Google avoided the most was what the heck the business model is for this whole thing.

Do you pay a subscription fee? Is it part of YouTube or maybe YouTube Red? Do they make money off sales of games after someone plays the instant demo? Is it free for an hour a day? Will it show ads every 15 minutes? Will publishers foot the bill as part of their normal marketing budget? No one knows!

It’s a difficult play because the most obvious way to monetize also limits the product’s exposure. Asking people to subscribe adds a lot of friction to a platform where the entire idea is to get you playing within 5 seconds.

Putting ads in is an easy way to let people jump in and have it be monetized a small amount. You could even advertise the game itself and offer a one-time 10 percent off coupon or something. Then mention that YouTube Red subscribers don’t see ads at all.

Sounds reasonable, but Google didn’t mention anything like this at all. We’ll probably hear more later this year closer to launch, but it’s hard to judge the value of the service when we have no idea what it will cost.

3. What about iOS devices?

Google and Apple are bitter rivals in a lot of ways, but it’s hard to get around the fact that iPhone owners tend to be the most lucrative mobile customers. Yet there were none in the live demo and no availability mentioned for iOS.

Depending on its business model, Google may have locked itself out of the App Store. Apple doesn’t let you essentially run a store within its store (as we have seen in cases like Amazon and Epic) and if that’s part of the Stadia offering, it’s not going to fly.

An app that just lets you play might be a possibility, but since none was mentioned, it’s possible Google is using Stadia as a platform exclusive to draw people to Pixel devices. That kind of puts a limit on the pitch that you can play on devices you already have.

4. What about games you already own?

A big draw of game streaming is to buy a game once and play it anywhere. Sometimes you want to play the big awesome story parts on your 60-inch TV in surround sound, but do a little inventory and quest management on your laptop at the cafe. That’s what systems like Steam Link offer.

But Google didn’t mention how its ownership system will work, or whether there would be a way to play games you already own on the service. This is a big consideration for many gamers.

It was mentioned that there would be cross platform play and perhaps even the ability to bring saves to other platforms, but how that would work was left to the imagination. Frankly I’m skeptical.

Letting people show they own a game and giving them access to it is a recipe for scamming and trouble, but not supporting it is missing out on a huge application for the service. Google’s caught between a rock and a hard place here.

5. Can you really convert viewers to players?

This is a bit more of an abstract question, but it comes from the basic idea that people specifically come to YouTube and Twitch to watch games, not play them. Mobile viewership is huge because streams are a great way to kill time on a train or bus ride, or during a break at school. These viewers often don’t want to play at those times, and couldn’t if they did want to!

So the question is, are there really enough people watching gaming content on YouTube who will actually actively switch to playing just like that?

To be fair, the idea of a game trailer that lets you play what you just saw five seconds later is brilliant. I’m 100 percent on board there. But people don’t watch dozens of hours of game trailers a week — they watch famous streamers play Fortnite and PUBG and do speedruns of Dark Souls and Super Mario Bros 1. These audiences are much harder to change into players.

The potential of joining a game with a streamer, or affecting them somehow, or picking up at the spot they left off, to try fighting a boss on your own or seeing how their character controls, is a good one, but making that happen goes far, far beyond the streaming infrastructure Google has created here. It involves rewriting the rules on how games are developed and published. We saw attempts at this from Beam, later acquired by Microsoft, but it never really bloomed.

Streaming is a low-commitment, passive form of entertainment, which is kind of why it’s so popular. Turning that into an active, involved form of entertainment is far from straightforward.

6. How’s the image quality?

Games these days have mind-blowing graphics. I sure had a lot of bad things to say about Anthem, but when it came to looks that game was a showstopper. And part of what made it great were the tiny details in textures and subtle gradations of light that are only just recently possible with advances in shaders, volumetric fog, and so on. Will those details really come through in a stream?

Don’t get me wrong. I know a 1080p stream looks decent. But the simple fact is that high-efficiency HD video compression reduces detail in a noticeable way. You just can’t perfectly recreate an image if you have to send it 60 times per second with only a few milliseconds to compress and decompress it. It’s how image compression works.
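To put rough numbers on that, here is a back-of-the-envelope sketch of how much data each frame gets. It borrows the 10 to 20 Mbps and 60FPS figures cited elsewhere in this piece; the 24-bit uncompressed frame and the constant bitrate are assumptions for illustration, not anything Google has confirmed.

```python
# Rough per-frame data budget for a 1080p, 60 fps game stream.
# Assumptions (illustrative, not from Google): uncompressed frames are
# 24-bit RGB, and the stream's bitrate is spread evenly across frames.

WIDTH, HEIGHT = 1920, 1080   # 1080p resolution
BYTES_PER_PIXEL = 3          # assumed 24-bit RGB
FPS = 60                     # frames sent per second


def frame_budget(bitrate_mbps: float) -> dict:
    """Compare an uncompressed frame with the bytes the stream can spend on it."""
    raw_bytes = WIDTH * HEIGHT * BYTES_PER_PIXEL
    budget_bytes = bitrate_mbps * 1_000_000 / 8 / FPS
    return {
        "raw_bytes": raw_bytes,
        "budget_bytes": budget_bytes,
        "compression_ratio": raw_bytes / budget_bytes,
        "frame_interval_ms": 1000 / FPS,
    }


if __name__ == "__main__":
    for mbps in (10, 20):
        b = frame_budget(mbps)
        print(
            f"{mbps} Mbps: about {b['budget_bytes'] / 1000:.1f} kB per frame, "
            f"roughly {b['compression_ratio']:.0f}:1 versus raw, "
            f"with {b['frame_interval_ms']:.1f} ms between frames"
        )
```

Under these assumptions the encoder has to squeeze each frame by a factor in the low hundreds, and do it inside a frame interval of about 17 milliseconds, which is why some fine detail gets lost.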

For some people this won’t be a big deal. They really might not care about the loss of some visual fidelity — the convenience factor may outweigh it by a ton. But there are others for whom it may be distracting, those who have invested in a powerful gaming console or PC that gives them better detail at higher framerates than Stadia can possibly offer.

It’s not apples to apples but Google has to consider these things, especially when the difference is noticeable enough that game developers and publishers start to note that a game is “best experienced locally” or something like that.

7. Will people really game on the go?

I don’t question whether people play games on mobile. That’s one of the biggest businesses in the world. But I’m not sure that people want to play Assassin’s Creed: Odyssey on their iPa… I mean, Pixel Slate. Let alone their smartphone.

Games on phones and tablets are frequently time-killers driven by addictive short-duration game sessions. Even the bigger, more console-like games on mobile usually aim for shorter play sessions. That may be changing in some ways for sure but it’s a consideration, and AAA console games really just aren’t designed for 5-10 minute gaming sessions.

Add to that that you have to carry around what looks like a fairly bulky controller and this becomes less of an option for things like planes, cafes, subway rides, and so on. Even if you did bring it, could you be sure you’ll get the 10 or 20 Mbps you’ll need to get that 60FPS video rate? And don’t say 5G. If anyone says 5G again after the last couple months I’m going to lose it.

Naturally the counterpoint here is Nintendo’s fabulously successful and portable Switch. But the Switch plays both sides, providing a console-like experience on the go that makes sense because of its frictionless game state saving and offline operation. Stadia doesn’t seem to offer anything like that. In some ways it could be more compelling, but it’s a hard sell right now.

8. How will multiplayer work?

Obviously multiplayer gaming is huge right now and likely will be forever, so Stadia will for sure support multiplayer one way or another. But multiplayer is also really complicated.

It used to be that someone just picked up the second controller and played Luigi. Now you have friend codes, accounts, user IDs, automatic matchmaking, all kinds of junk. If I want to play The Division 2 with a friend via Stadia, how does that work? Can I use my existing account? How do I log in? Are there IP issues and will the whole rigmarole of the game running in some big server farm set off cheat detectors or send me a security warning email? What if two people want to play a game locally?

Many of the biggest gaming properties in the world are multiplayer focused, and without a very, very clear line on this it’s going to turn a lot of people off. The platform might be great for it — but they have some convincing to do.

9. Stadia?

Branding is hard. Launching a product that aims to reach millions and giving it a name that not only represents it well but isn’t already taken is hard. But that said… Stadia?

I guess the idea is that each player is kind of in a stadium of their own… or that they’re in a stadium where Ninja is playing, and then they can go down to join? Certainly Stadia is more distinctive than stadium and less copyright-fraught than Colosseum or the like. Arena is probably out too.

If only Google already owned something that indicated gaming but was simple, memorable, and fit with its existing “Google ___” set of consumer-focused apps, brands, and services.

from Gadgets – TechCrunch https://ift.tt/2W4pca6

via IFTTT

Here’s how you’ll access Google’s Stadia cloud gaming service

Google isn’t launching a gaming console. The company is launching a service instead: Stadia. You’ll be able to run a game on a server and stream the video feed to your device. You won’t need to buy new hardware to access Stadia, but Stadia won’t be available on all devices from day one.

“With Google, your games will be immediately discoverable by 2 billion people on a Chrome browser, Chromebook, Chromecast, Pixel device. And we have plans to support more browsers and platforms over time,” Google CEO Sundar Pichai said shortly after opening GDC 2019.

As you can see, the Chrome browser will be the main interface to access the service on a laptop or desktop computer. The company says that you’ll be able to play with your existing controller. So if you have a PlayStation 4, Xbox One or Nintendo Switch controller, that should work just fine. Google is also launching its own controller.

As expected, if you’re using a Chromecast with your TV, you’ll be able to turn it into a Stadia machine. Only the latest Chromecast supports Bluetooth, so it remains to be seen whether you’ll need a recent model to play with your existing controller. Google’s controller uses Wi-Fi, so that should theoretically work with older Chromecast models.

On mobile, it sounds like Google isn’t going to roll out its service to all Android devices from day one. Stadia could be limited to Pixel phones and tablets at first. But there’s no reason Google would not ship Stadia to all Android devices later.

Interestingly, Google didn’t mention Apple devices at all. So if you have an iPhone or an iPad, don’t hold your breath. Apple doesn’t let third-party developers sell digital content in their apps without going through the App Store. This will create a challenge for Google.

Stadia isn’t available just yet. It’ll launch later this year. As you can see, there are many outstanding questions after the conference. Google is entering a new industry and it’s going to take some time to figure out the business model and the distribution model.

from Gadgets – TechCrunch https://ift.tt/2Tnv8JG

via IFTTT

Google is creating its own first-party game studio

Google unveiled its cloud gaming platform, Stadia, at a conference in San Francisco. While most of the conference showcased well-known games you can play on your PC, Xbox One or PlayStation 4, the company also announced it is launching its own first-party game studio, Stadia Games and Entertainment.

Jade Raymond is going to head the studio and was here to announce the first details. The company is going to work on exclusive games for Stadia. But the studio will have a bigger role than that.

“I’m excited to announce that, as the head of Stadia Games and Entertainment, I will not only be bringing first-party game studios to reimagine the next generation of games,” Raymond said. “Our team will also be working with external developers to bring all of the bleeding-edge Google technology you have seen today available to partner studios big and small.”

Raymond has been working in the video game industry for more than 15 years. In particular, she was a producer for Ubisoft in Montreal during the early days of the Assassin’s Creed franchise. She also worked on Watch Dogs before leaving Ubisoft for Electronic Arts.

She formed Motive Studios for Electronic Arts and worked with Visceral Games, another Electronic Arts game studio. She was working on an untitled single-player Star Wars video game, but Visceral Games closed in 2017 and the project was subsequently canceled.

According to Google, 100 studios around the world have already received development hardware for Stadia. There are more than 1,000 engineers and creatives working on Stadia games or ports right now.

Stadia uses a custom AMD GPU and a Linux operating system. Games that are already compatible with Linux should be easy to port to Stadia. But there might be more work for studios focused on Windows games.

According to Stadia.dev, the cloud instance runs on Debian and features Vulkan. The machine runs an x86 CPU with a “custom AMD GPU with HBM2 memory and 56 compute units capable of 10.7 teraflops.” That sounds a lot like the AMD Radeon RX Vega 56, a relatively powerful GPU, but something not as powerful as what you can find in high-end gaming PCs today.
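The quoted figures can be sanity-checked with a little arithmetic. The sketch below assumes the layout of AMD’s GCN-family GPUs, with 64 shader cores per compute unit and two floating-point operations per core per clock (one fused multiply-add); neither number comes from Stadia.dev, so treat the result as an estimate.

```python
# Back out the GPU clock implied by "56 compute units capable of 10.7 teraflops".
# Assumptions (not stated by Stadia.dev): a GCN-style layout with 64 shader
# cores per compute unit and 2 FLOPs per core per clock (fused multiply-add).

SHADERS_PER_CU = 64              # assumed, typical of AMD GCN GPUs
FLOPS_PER_SHADER_PER_CLOCK = 2   # assumed, one fused multiply-add per clock


def flops_per_clock(compute_units: int) -> int:
    """Floating-point operations the whole GPU can issue in one clock cycle."""
    return compute_units * SHADERS_PER_CU * FLOPS_PER_SHADER_PER_CLOCK


def implied_clock_ghz(teraflops: float, compute_units: int) -> float:
    """Clock speed in GHz needed to reach the quoted teraflops figure."""
    return teraflops * 1e12 / flops_per_clock(compute_units) / 1e9


if __name__ == "__main__":
    clock = implied_clock_ghz(10.7, 56)
    print(f"Shader cores: {56 * SHADERS_PER_CU}")
    print(f"Implied clock: about {clock:.2f} GHz")
```

Under those assumptions, 56 compute units work out to 3,584 shader cores running at roughly 1.49 GHz, which is consistent with a Vega-class part.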

Google will be running a program called Stadia Partners to help third-party developers understand this new platform.

from Gadgets – TechCrunch https://ift.tt/2TdEbg9

via IFTTT